#unity reference prefab in script


Through Yourself: Dev Log Week 1-Week 3

Since I only just made this page, I will go over what has been done in the last three weeks:

Completed version 1.0 of the GDD for 'Through Yourself'; ensured that this project is small enough to be done with a small team, but rich in story

Reference images added to GDD

Started Unity project; added a textured ball, a camera, and pill for testing purposes on a flat, colored plane

Created basic scripting for movement (FPSMovement)

Created basic scripting for camera control (FirstPersonCamera)

Created basic scripting for picking up objects (HoldObjects which became HoldingObjects later)

Revised movement and camera control scripts to work better with the placeholder 'Player' object as well as picking up objects

Added Object Rotation to HoldingObjects script; removed old script from textured ball placeholder and added new HoldingObjects script to Player prefab

Tuned object movement on pick-up so objects come to the hand more smoothly; further refined FPSMovement and FirstPersonCamera to work more easily with the new HoldingObjects script

Started work on making objects snap to specific places

I am aware that this is not a whole lot at first glance, but I have a vision, and am determined to see it through. I do hope you all take a chance on this, and watch as it progresses.

---Melodania

#game development#gaming#lgbtq#video games#unity#trans unity#unity3d#original story#short story#fiction#stories#transgender#lgbt pride#trans pride#lgbt#lgbtqia#narrative#coding#programming#developer#game design


Progress with damage system

Quite a big breakthrough for my humble self! After watching a ton of vids on Events and Delegates in Unity I made a basic foundation for handling events like damaging enemies in a way that's modular and (hopefully) scalable. Before, I had an issue with damage being propagated to every copy of an enemy prefab on the map. That sucked, and I researched options for isolating affected enemies from the rest. What seemed like a good option was using HashSets: unordered collections of unique objects. An enemy that intersects my hitbox is added to the set. If it leaves the hitbox it is removed from the set. Upon attack, only enemies in the set would receive damage. Here's a preview of a counter working as intended: the player stores the number of affected cubes inside the hit radius. (In the console is a scrolling list of named cubes currently entering or exiting the set.)
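The enter/exit bookkeeping described above can be sketched outside the engine. This is a rough Python stand-in, not the Unity C# from the post: a Python `set` plays the role of the HashSet, and the `Hitbox` class, its method names, and the cube names are all made up for illustration.

```python
class Hitbox:
    """Tracks which enemies currently overlap the attack area."""

    def __init__(self):
        self.in_range = set()  # stands in for a C# HashSet

    def on_enter(self, enemy):
        self.in_range.add(enemy)      # adding the same enemy twice is a no-op

    def on_exit(self, enemy):
        self.in_range.discard(enemy)  # no error if the enemy is already gone

    def attack(self, health, damage):
        # Only enemies currently inside the hitbox lose health.
        for enemy in self.in_range:
            health[enemy] -= damage


health = {"cube_a": 10, "cube_b": 10, "cube_c": 10}
hitbox = Hitbox()
hitbox.on_enter("cube_a")
hitbox.on_enter("cube_b")
hitbox.on_exit("cube_b")   # cube_b left before the attack
hitbox.attack(health, 3)
```

Here only `cube_a` is in the set when the attack lands, so it is the only entry whose health changes.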

Very cool! And also very wrong and unnecessary. The bugs this would eventually create are unfathomable. It didn't help me much, but I'll keep the hash idea for later. I suspected the issue was in how an object is assigned to receive damage, not the hitbox, so I scrapped the HashSet idea. After some fiddling I found a solution in slightly changing how damage is applied to the object. Turns out getting a reference to the affected object via the player's attack script worked perfectly. I'm not sure why GetComponent feels like a bad thing, it's an irrational negative bias, but it works. Scan for instances of anything tagged as "enemy", assign it to a temporary variable, and make it take damage via an event stored in the enemy's script.

So it seems like it's fixed now (within the confines of an empty testing scene). Now I can apply damage only to enemies affected by relevant hitboxes. With this I'll try adding it to my melee and ranged modes: slashing with a sword and blasting spells. I've also added a kill counter and a sum of dealt damage to this prototype for fun:
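The post does not say what made every enemy copy take damage. One common cause of that symptom, assumed here purely for illustration, is a damage event shared by all instances instead of a call on one specific reference. The Python sketch below contrasts the two; `Enemy`, `broadcast_damage`, and `damage_target` are hypothetical names, not the author's code.

```python
class Enemy:
    shared_listeners = []  # class-level: one list shared by ALL enemies

    def __init__(self, name, hp=10):
        self.name = name
        self.hp = hp
        # Every instance subscribes to the same shared "event".
        Enemy.shared_listeners.append(self.take_damage)

    def take_damage(self, amount):
        self.hp -= amount


def broadcast_damage(amount):
    # Raising the shared event hits every subscribed enemy.
    for listener in Enemy.shared_listeners:
        listener(amount)


def damage_target(target, amount):
    # Using a reference to one instance hits only that enemy.
    target.take_damage(amount)


a, b = Enemy("a"), Enemy("b")
broadcast_damage(2)   # both enemies lose 2
damage_target(a, 3)   # only a loses 3
```

After these two calls, `a` has taken both hits while `b` has only taken the broadcast one, which is the difference between the original bug and the fix described in the post.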

I want to make a presentable prototype soon :)


Admit One Dev Blog: Update 1

Did some work todaaay on Laaabour Dayyy

Today I did some work on learning how to use animation notifications and animation blending to allow the Player to draw an equipped weapon in Unreal Engine. One of the learning resources I've been using is this Udemy course here (if you're interested in grabbing it, it might be worthwhile to wait until it's on sale, since Udemy sales occur pretty frequently). While it's likely I'll rip out and replace much of what I'm doing with this tutorial series (or have to reconnect a lot of things once I have custom art and animations), I still feel like running through this series has been helpful since I'm pretty new to Unreal (much more experienced in Unity and another now-defunct game engine) and have been learning a lot.

This leads me to:

================================================

THE UNREAL LEARNING CORNER: Part 1

Whenever this segment shows up here I'll likely talk about some problem I've run into and explain how I fixed it. None of these will be "be all end all" solutions, but hopefully the record of these mishaps will be useful for someone. It will also be a good place to show off any funny bugs I may run into. The things I have today are pretty dry though.

LET THE PARENT SKELETAL MESH CONTAIN THE MESH

So an Actor or Character Blueprint (a kind of prefab in UE that can also contain Visual Scripting Code) can contain a Skeletal Mesh component. In an early effort to organize how the Player Character and Customization would be set up (I would like there to be Character Customization, no guarantee of that in a year though) I wanted to place all Meshes (art/models) underneath one parent in an attempt to segment where all the clothing and other assets would be placed. I've basically achieved a similar goal by using a "Child Actor" (R and LFoot above). While it remains to be seen if that will completely work, I'm at least confident that letting the Parent Mesh actually contain the Player's model prevents the two issues below.

When trying to reference the Mesh of a Blueprint, Unreal will grab the uppermost Mesh in its personal hierarchy by default, so without that Get Child Component node, there's no way it could actually affect my character in the way I wanted it to, in this case attaching a sword to their side. While kind of annoying to add another node, this wouldn't be so bad, however:

I couldn't find a way to access the child model and tell it to perform an animation. There may be a way to do this, but ultimately just making the parent Skeletal Mesh actually contain the Skeletal Mesh seems to be the way to go, so as to avoid having to filter through what's supposed to be affected.

TRIGGERED VS STARTED

Also you can see above that I'm activating the Anim Montage with the Triggered type of input detection(?). I haven't had the best luck with that just yet. I think it's for actions you're meant to hold down, but Ongoing could also be used for that? Unsure. Regardless, I recommend using Started for something you just want to hit once, like a button press. Using Triggered here was actually causing a variable to change itself four times per button press, which resulted in some inconsistent behavior and confusion.

================================================

So a bit simple today, but I'm hoping these will steadily get more exciting as we go along. Thanks for reading!
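To see why a toggle behaves oddly on Triggered, here is a deliberately simplified Python model, not Unreal code. It assumes Started fires only on the first frame of a press and Triggered fires on every frame the key stays down; the four-frame press length is chosen to match the "four times per button press" observation, and `simulate` is a made-up helper.

```python
def simulate(held_frames, fire_on):
    """Count how often a toggle flips while a key is held for some frames."""
    drawn = False
    flips = 0
    for frame in range(held_frames):
        started = frame == 0   # first frame of the press only
        triggered = True       # every frame while held (simplified model)
        if (fire_on == "started" and started) or (
            fire_on == "triggered" and triggered
        ):
            drawn = not drawn  # e.g. weapon drawn / sheathed
            flips += 1
    return drawn, flips


on_started = simulate(4, "started")
on_triggered = simulate(4, "triggered")
```

Under this model, the Started handler flips the variable once and leaves the weapon drawn, while the Triggered handler flips it four times and ends up back where it began.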


Unity Game Studios: Best Practices for Efficient Game Development

Unity has become one of the most popular game engines for developers of all sizes, offering powerful tools and a flexible workflow that streamline game development. However, maximizing efficiency in game development requires more than just using the right tools; it involves adopting best practices that optimize workflows, enhance collaboration, and ensure high-quality outcomes. In this blog, we will explore best practices for efficient game development using Unity, helping you and your team create games more effectively and efficiently.

1. Plan and Pre-Production

Define Clear Objectives and Scope

Before diving into development, it’s essential to have a clear understanding of your game’s objectives, target audience, and scope. Define the core mechanics, features, and narrative elements. Create a game design document (GDD) that outlines these aspects in detail. This document will serve as a reference throughout the development process, ensuring everyone on the team is aligned.

Create a Prototype

Building a prototype early in the development process helps validate your game’s core mechanics and concepts. Unity’s rapid prototyping capabilities allow you to quickly test and iterate on your ideas. A prototype can reveal potential issues and provide valuable insights that inform subsequent development stages.

2. Optimize Workflow and Organization

Use Version Control

Implementing version control is crucial for managing your project’s files and codebase. Tools like Git and services like GitHub or Bitbucket enable collaborative development, track changes, and prevent data loss. Unity integrates well with these tools, making it easy to manage and sync project updates across the team.

Organize Your Project Structure

Maintain a well-organized project structure to enhance efficiency and readability. Create a consistent folder hierarchy for assets, scripts, scenes, and other project elements. Unity’s Asset Store offers templates and tools that can help standardize your project structure.

Leverage Prefabs and Asset Reusability

Use prefabs to create reusable game objects and components. Prefabs allow you to manage and update multiple instances of an object from a single source, saving time and reducing errors. Organize your prefabs in a dedicated folder for easy access and management.

3. Optimize Performance

Efficient Asset Management

Optimize your game assets to ensure smooth performance across different platforms. Use appropriate levels of detail (LOD) for models, compress textures, and manage polygon counts. Unity’s Profiler tool can help identify performance bottlenecks related to assets.

Script Optimization

Efficient scripting is key to maintaining performance. Avoid using expensive operations in update loops, and use object pooling to manage frequently instantiated and destroyed objects. Profiling tools in Unity can help monitor and optimize your scripts’ performance.
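As a rough illustration of object pooling, the sketch below reuses released objects instead of creating new ones. It is plain Python, not Unity code, and the `Bullet` and `Pool` classes are hypothetical; in a Unity project the same idea would be applied to GameObjects that are deactivated and reactivated.

```python
class Bullet:
    def __init__(self):
        self.active = False


class Pool:
    """Hands out pre-made objects and takes them back for reuse."""

    def __init__(self, size):
        self.free = [Bullet() for _ in range(size)]
        self.created = size  # total objects ever constructed

    def acquire(self):
        if self.free:
            obj = self.free.pop()   # reuse an idle object
        else:
            obj = Bullet()          # grow only when the pool is empty
            self.created += 1
        obj.active = True
        return obj

    def release(self, obj):
        obj.active = False
        self.free.append(obj)       # make it available again


pool = Pool(2)
first = pool.acquire()
pool.release(first)
second = pool.acquire()   # gets the same object back
```

Because the released bullet goes back on the free list, the second `acquire` returns it again and no new object is constructed.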

Manage Memory Usage

Monitor and manage memory usage to prevent crashes and slowdowns. Use Unity’s memory profiler to identify memory leaks and optimize asset loading and unloading. Implement efficient garbage collection practices to minimize runtime memory overhead.

4. Enhance Collaboration

Use Collaboration Tools

Effective collaboration tools are essential for efficient teamwork. Tools like Unity Version Control (which replaced the now-retired Unity Collaborate), Slack, Trello, and Asana facilitate communication, task management, and project tracking. Unity Version Control allows team members to sync their work and resolve conflicts.

Regular Team Meetings and Reviews

Conduct regular team meetings to discuss progress, address challenges, and plan next steps. Code reviews and playtesting sessions provide opportunities for feedback and quality assurance, ensuring that the project stays on track and maintains high standards.

5. Testing and Quality Assurance

Automated Testing

Implement automated testing to catch bugs and ensure stability. Unit tests, integration tests, and performance tests help verify that your game’s components work as intended. Unity Test Framework and other testing tools can streamline this process.

Regular Playtesting

Frequent playtesting is crucial for identifying gameplay issues and improving user experience. Gather feedback from diverse testers to uncover different perspectives and potential improvements. Iterate on feedback to refine gameplay mechanics and polish the overall experience.

6. Documentation and Knowledge Sharing

Comprehensive Documentation

Maintain thorough documentation for your project, including code comments, development guidelines, and workflow processes. Well-documented projects are easier to manage, especially when onboarding new team members or revisiting the project after a hiatus.

Knowledge Sharing

Foster a culture of knowledge sharing within your team. Regularly share tips, best practices, and lessons learned through meetings, internal forums, or documentation. Encourage team members to contribute to shared knowledge bases and learn from each other’s experiences.

7. Continuous Learning and Improvement

Stay Updated with Unity’s Features

Unity is constantly evolving, with regular updates introducing new features and improvements. Stay informed about the latest developments by following Unity’s official blog, forums, and release notes. Incorporate new features and best practices into your workflow to stay competitive and efficient.

Invest in Training and Development

Encourage continuous learning through training and professional development opportunities. Attend Unity workshops, webinars, and conferences to stay current with industry trends and advancements. Investing in your team’s skills and knowledge pays off in the long run by enhancing overall efficiency and innovation.

Conclusion

Efficient game development with Unity requires a combination of planning, optimization, collaboration, and continuous improvement. By following these best practices, you can streamline your workflows, enhance team productivity, and create high-quality games that stand out in the competitive market. Unity’s powerful tools and supportive community provide a solid foundation, but it’s the application of these best practices that truly revolutionizes the development process. Embrace these strategies to maximize your potential and achieve success in your game development endeavors.


Blog Post #3 (Feb. 15th)

Over the past 2 weeks, the team began development of the game using Unity, completing a cumulative prototype for the first milestone. This included both exploration and management activities, as well as implementing early visuals for UI.

I was tasked with creating the shop scene which facilitates the management portion of our game. This included the angled top-down view where players move as the avatar-based herbalist, and then interact with a workstation to enter an herb mixing and grinding sequence. An NPC would also walk in to place an order to prompt the player for which herbs they want in their mix.

[Embedded YouTube video]

My first rendition of this is seen above, where the NPC walks in and places an order with three of the existing herbs. The player can interact with a workstation, leading to a drag & drop herb activity. Once herbs are in the mortar, the player can press the space bar to simulate grinding the herbs.

[Embedded YouTube video]

After further development in the first week, I took visuals from the artists Bea & Zynab to implement into the game and added the temporary prototype main menu and tutorial screens. Additionally, while the other developer Ilyas worked on the exploration scene, I made a temporary mini-world to test the collectible herb Prefabs that I made. The grinding activity was reviewed by the team, and we decided to make it more kinaesthetic instead, having the player physically grind the herbs to add immersive simulation. The NPC now also gave a response to the order, and would check whether or not you got it right.

[Embedded YouTube video]

By the second week, I further finalized the game to prepare for playtesting by adding two additional herb types and random NPC orders. This helped add challenge to the prototype, making it feel more like a complete game. The NPC would return in an endless loop after completing each order with a new prompt each time.
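The random order and the "did you get it right" check can be sketched as a comparison of herb counts. This is a Python stand-in for the Unity C# scripts described above; the herb names, the order size of three, and both function names are assumptions made for the example.

```python
import random
from collections import Counter

# Hypothetical herb names: three original types plus the two added later.
HERBS = ["mint", "sage", "thyme", "basil", "lavender"]


def random_order(rng, size=3):
    """Pick `size` herbs at random; repeats are allowed."""
    return Counter(rng.choice(HERBS) for _ in range(size))


def check_order(order, mortar):
    """The mix is right when it has exactly the ordered herbs and counts."""
    return order == Counter(mortar)


rng = random.Random(7)                   # seeded so runs are repeatable
order = random_order(rng)
correct_mix = list(order.elements())     # what the player should grind
```

Comparing counts instead of lists means the order in which herbs were dropped into the mortar does not matter, only which herbs and how many.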

The last step was combining the scenes I created with the outdoor exploration scene Ilyas created, which utilized the collectible herbs prefabs I made, as well as moving the shop entry point to a temporary graphic by Bea. I ended the week by playtesting, as well as playing around with Timeline in Unity as we will need to create multiple cutscenes to implement narrative beats.

The team has not encountered any issues thus far. We are working collaboratively, as developers are utilizing GitHub to work simultaneously on different scripts and scenes, and artists are creating graphics and designing UI, sharing completed ones to developers. My personal challenge thus far has been learning the Unity interface and functionalities, as it is my first time working with the game engine. With my experience in Java & Processing, I can quickly transfer my coding knowledge to C# and properly use scripts which I learned while coding with Lua. I will continue to develop my skills by self-learning through reference documentation and video tutorials.

For the following weeks, I will improve some interaction bugs, such as keypresses not registering properly, as well as optimizing my existing scripts. I will be further developing the shop scene with updated graphics and starting to define more complex NPC behavior as we make official levels for our game.


[Embedded YouTube video]

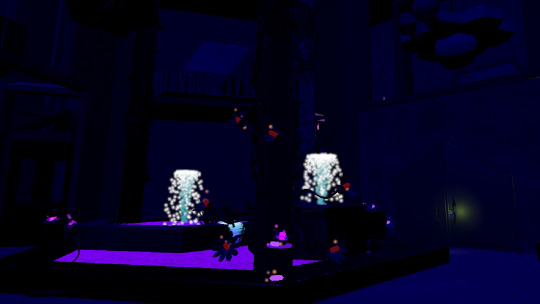

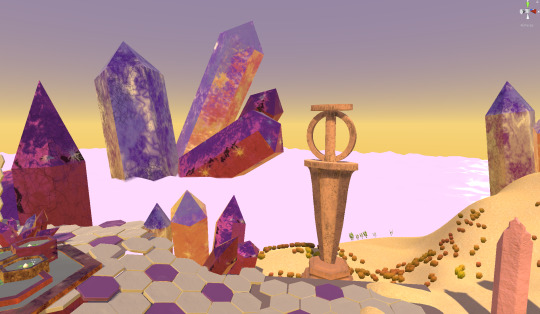

This project is a testing prototype that I have used to test various mechanics, Unity features, data types, etc. Some of what I tested I already knew (e.g. moving the player forward and back and rotating), other things I learned (e.g. loading from “Resources” and activating other game objects' animators from code, respectively). The goal of the project is to test out unfamiliar essentials to improve my C# programming and general Unity engine knowledge. I created this little level with the 3D tool kit's modular prefabs and particles as a start to testing.

WHAT I AM DEMONSTRATING:

• Player movement and its abilities to shrink and regrow, change color, and shoot randomized size lava balls.

• Raycast mechanics such as spawning rocks, moving the player cube, changing rock colors and detecting certain objects like “items”.

• Each Trigger has a distinctive behavior that will respond when the player enters their trigger such as camera triggers, light trigger, animation trigger, etc.

• Speaking of camera triggers, each camera trigger can be triggered by the player to be idle, follow, move or both (except idle).

• Creating a mesh from code using a certain number of UVs, vertices, tris, etc.

• Loading objects from resources without getting a public reference to the game object.

• Creating an object by code.

• Applying gravity to a game object so that it sticks to a sphere as if it were on a planet like Earth.

• Minimap follows and rotates with the player, also can be expanded fullscreen.

• Particle activates on certain game objects if the player collides with that object.

WHAT I LEARNED:

--Player Mechanics--

• Changing player cube size with transform.localScale by inputs.

• When instantiating lava balls, changing the game object’s name to “sphere” instead of “sphere clone” and creating a random range of Vector3.one size scales, meaning each one spawns at a random size.

• Making lava balls use ForceMode.Acceleration to ignore their mass, so they don't slow down or speed up depending on the mass.

• 2 ways to write Vector3.one.

• Changing the player cube's colour with the Renderer's material.color by inputs.

• Instantiating dust particles when the player cube hits a certain wall, using Quaternion.identity and its transform.position.
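The mass-independence of ForceMode.Acceleration mentioned in the list above comes down to whether the applied value is divided by mass. A minimal numeric sketch in Python follows; it is not Unity's physics code, and `velocity_change` with its string `mode` argument is a hypothetical helper.

```python
def velocity_change(value, mass, dt, mode):
    """Velocity gained over one step of length dt."""
    if mode == "force":
        return value / mass * dt   # heavier bodies gain less speed
    if mode == "acceleration":
        return value * dt          # mass is ignored entirely
    raise ValueError(mode)


light_force = velocity_change(10.0, 2.0, 0.5, "force")
heavy_force = velocity_change(10.0, 8.0, 0.5, "force")
light_accel = velocity_change(10.0, 2.0, 0.5, "acceleration")
heavy_accel = velocity_change(10.0, 8.0, 0.5, "acceleration")
```

With the "force" mode the heavier ball speeds up less, while with the "acceleration" mode both balls gain the same speed, which is why randomly sized lava balls can still fly consistently.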

--Camera manager and Triggers--

• Made two separate scripts that can smoothly follow or look at the player depending on which camera trigger the player enters. This is done with Vector3s, LookAt, quaternions, Lerp, and Slerp depending on the script. It can offset the camera if needed.

• Using camera triggers that store a reference to the Camera Manager's camera states to control which of the 4 states executes its specific code. Depending on the public state selected on the trigger, the camera switches states when the player cube enters it (more info in the next point).

• Creating a Camera Manager that controls the current camera behavior states such as idle, follow, look at, or both follow and look at, by using a switch statement with functions.

• Creating a minimap for the first time ever by adding a second camera above the player and rendering a certain distance below. The minimap can follow the player cube by moving and rotating with a Vector3 and Quaternion.Euler respectively.

• Making the minimap expand fullscreen by pressing the M key and shrink to its default size once M is pressed again, using the Camera rect.

• Activating another game object's Animator to play a specific animation bool when the player’s cube enters a trigger referencing that Animator. Also playing a different animation if the player's cube leaves the animator trigger.

• After entering a door trigger, using a LeanTween animation to smoothly open the door downwards over 2 seconds. LeanTween also avoids writing the interpolation (Lerps) by hand.
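The four-state camera manager from the list above can be sketched as an enum plus an update step that branches on the current state. This is a Python approximation and not the author's C# scripts; the class, the default offset, and the interpolation factor `t` are all assumed for illustration.

```python
from enum import Enum


class CameraState(Enum):
    IDLE = 0
    FOLLOW = 1
    LOOK_AT = 2
    FOLLOW_AND_LOOK_AT = 3


def lerp(a, b, t):
    """Linear interpolation between two 3D points."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


class CameraManager:
    def __init__(self, position):
        self.state = CameraState.IDLE
        self.position = position
        self.look_target = None

    def on_trigger_enter(self, new_state):
        # A camera trigger carries the state it wants to switch to.
        self.state = new_state

    def update(self, player_pos, offset=(0.0, 5.0, -10.0), t=0.5):
        if self.state in (CameraState.FOLLOW, CameraState.FOLLOW_AND_LOOK_AT):
            goal = tuple(p + o for p, o in zip(player_pos, offset))
            self.position = lerp(self.position, goal, t)  # smooth follow
        if self.state in (CameraState.LOOK_AT, CameraState.FOLLOW_AND_LOOK_AT):
            self.look_target = player_pos                 # aim at the player


cam = CameraManager((0.0, 0.0, 0.0))
cam.update((10.0, 0.0, 0.0), offset=(0.0, 0.0, 0.0))
idle_position = cam.position              # unchanged while idle
cam.on_trigger_enter(CameraState.FOLLOW)
cam.update((10.0, 0.0, 0.0), offset=(0.0, 0.0, 0.0))
```

While idle the camera ignores the player; once a trigger switches it to follow, each update moves it part of the way toward the player plus the offset.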

--Raycasting and Mouse events--

• Raycasting from a camera onto the collider (ground) to get the player cube to move to wherever the player has clicked, while making sure it stays at the same y position while moving.

• Using the Rigidbody's MovePosition method, which moves the player cube to a new position once the player has clicked on that position.

• Making the player cube follow the mouse cursor to a new position on the ground by aiming a ray from the camera (cam.ScreenPointToRay(inputPosition)), basically at the player's cursor.

• How raycasts store info on what they hit.

• How certain game objects can be detected by the raycast by checking their name with hit.collider.name. Also scaling them with transform.localScale from the same raycast.

• Using an Array of what and storing the data of what the raycast hits based on its game object name in the console and turning certain game objects green if they have a renderer on their parent game object.

• How to spawn rocks from the camera raycast when the mouse is clicked on a collider.

• How Mouse events are not based on cursor design but only on what the mouse touches or hovers over.

• The difference between OnMouseEnter, OnMouseExit, and OnMouseDown, and a few others like OnMouseDrag and OnMouseOver.
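The click-to-move idea in the list above is, at its core, a ray hitting a flat ground plane, with the player's own height kept when moving. The Python sketch below shows that geometry only; it is not Unity's Physics.Raycast, and both function names and the flat-ground assumption are mine.

```python
def ray_to_ground(origin, direction, ground_y=0.0):
    """Return the point where a ray meets the plane y = ground_y, or None."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dy == 0:
        return None            # ray is parallel to the ground
    t = (ground_y - oy) / dy
    if t < 0:
        return None            # ground is behind the ray
    return (ox + dx * t, ground_y, oz + dz * t)


def move_target(player_pos, hit):
    """Move to the hit point but keep the player's current height."""
    return (hit[0], player_pos[1], hit[2])


hit = ray_to_ground((0.0, 10.0, 0.0), (1.0, -1.0, 0.0))
miss = ray_to_ground((0.0, 10.0, 0.0), (0.0, 1.0, 0.0))
target = move_target((1.0, 0.5, 1.0), hit)
```

A ray pointing away from the ground returns no hit, and the target keeps the player's original y value so the cube does not sink into or float above the floor.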

--Other Mechanics and misc--

• Lerps and slerps are good for moving game objects without a rigid body.

• The Resources class can find assets in a Resources folder with Resources.Load and load them into the scene without using a public game object reference.

• Resources.FindObjectsOfTypeAll can be used to locate assets and scene objects.

• Turning off the player cube's gravity and some constraints when it is on a sphere (like a planet).

• How FixedUpdate is good for rigid bodies, particularly for movement.

• How the gravity attractor pulls the player toward the sphere on every update, whether or not the player is moving, so it does not fall off the sphere.
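The planet-style gravity in the list above amounts to pulling the body toward the sphere's center on every physics step instead of straight down. Here is a small Python sketch of one such step; the function name, the constant pull strength, and the sample positions are assumptions, not values from the project.

```python
import math


def gravity_step(position, velocity, center, strength, dt):
    """Add one step of pull toward `center` to the velocity."""
    to_center = [c - p for p, c in zip(position, center)]
    dist = math.sqrt(sum(d * d for d in to_center))
    if dist == 0:
        return tuple(velocity)                 # already at the center
    direction = [d / dist for d in to_center]  # unit vector toward the planet
    return tuple(v + d * strength * dt for v, d in zip(velocity, direction))


# Standing on top of the planet: pulled down.
on_top = gravity_step((0.0, 10.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), 9.8, 1.0)
# Standing on its side: pulled sideways, toward the center.
on_side = gravity_step((10.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), 9.8, 1.0)
```

The pull direction changes with the body's position, so "down" always points at the sphere's center wherever the player stands.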

--Creating Mesh and objects--

• Creating a mesh by code by first adding Mesh Filter and Mesh Renderer components and clearing the mesh. Then adding vertices, UVs, and triangles by using arrays of Vector3s, Vector2s, and ints, all by code.

• Creating quads by code by defining the vertices as an array of Vector3s from the quad height and quad width, the tris as an array of ints, the normals as an array of Vector3s, and the UVs as Vector2s, and then assigning them to the quad.

• Getting the created quad to move back and forth by using a while loop to move its vertices and normals by multiplying with Mathf.Sin(Time.time).

• Creating a new game object by code by first giving it a name and a mesh type such as a sphere, assigning it a position, then adding a Rigidbody. All without assigning it in the Inspector before pressing play.
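The arrays that make up a quad, as described in the list above, can be laid out explicitly. This Python sketch only builds the data (four vertices, two triangles, normals, UVs) and does not create a Unity Mesh; the vertex order and winding are one common convention and `build_quad` is a hypothetical helper.

```python
def build_quad(width, height):
    """Return the raw arrays for a flat quad in the XY plane."""
    vertices = [
        (0.0, 0.0, 0.0),        # 0: bottom-left
        (width, 0.0, 0.0),      # 1: bottom-right
        (0.0, height, 0.0),     # 2: top-left
        (width, height, 0.0),   # 3: top-right
    ]
    # Two triangles, each given as three indices into `vertices`.
    triangles = [0, 2, 1, 2, 3, 1]
    # One normal per vertex, all facing the same way.
    normals = [(0.0, 0.0, -1.0)] * 4
    # One UV per vertex, mapping the texture corners to the quad corners.
    uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
    return {"vertices": vertices, "triangles": triangles,
            "normals": normals, "uvs": uvs}


quad = build_quad(2.0, 1.0)
```

The triangle list must have a length that is a multiple of three, and the normal and UV arrays must each have one entry per vertex.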

WHAT COULD I DO BETTER?

• Creating a mesh by code was very complicated. If I need to use those mechanics again in future games, I may need to do more research on how to make all sorts of unique meshes like the default Unity 3D primitives.

• The raycast checker on the player cube detected camera triggers, making it difficult to detect the “item” by raycast. I will need to find other ways for camera triggers to detect the player without getting in the way of the raycast.

• When the dust particle appears from the cube wall collision, it should play in the same rotation position as the cube instead of just one rotation.

• At the moment I could not get the dust particles to instantiate in the right rotation when colliding with certain walls, next time I will need to find out how to make them do so consistently.

OVERALL:

It was an interesting exercise. I got a better understanding of Unity, datatypes, and making mechanics. This will make my game dev journey much easier since I understand the code a lot better. Hopefully, I can do something like this again to learn more advanced essentials.

#pc #GameDesigner #Mechanic Test #Unity3D #gamedevelopment #gamedev #UnityEngine #IndieGames #AustralianGameDevoloper #indiedev #indiegame #indie #indiedev #Gamedev #IndieGames #SoleDevoloper #indiegaming #IndieGameDev #indiegames


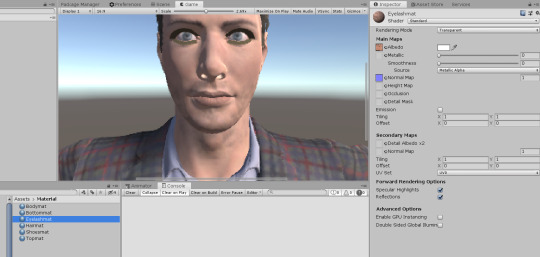

Adobe fuse unity3d materials

I have made a script that imports characters from Adobe Fuse CC (Beta) and makes the necessary modifications to the materials and texture maps. Without this script the imported character materials are all broken. Note, the attached script is not designed to work with characters downloaded direct from the Mixamo store. It is designed to take a character that was made in Fuse, and uses the textures that Fuse exports that are designed for use with Unity. The textures within the FBX files are not ideal for use in Unity.

Using this script the results are quite close to the quality within the Fuse window e.g.

Within Unity create folder called Mixamo.

Create a folder within Mixamo called Editor.

Now the script should exist as Assets/Mixamo/Editor/MixamoUnityImporter.cs.

Create a model using the Adobe Fuse application.

Export textures for Unity 5 into a new folder with the same name as the character.

From Fuse select File -> Animate with Mixamo.

Download the rigged character as a Unity FBX file.

Save the Mixamo FBX file beside the exported textures folder.

In Unity, click the menu Mixamo -> Import from Fuse with exported Unity textures.

This results in importing the character to Assets/Mixamo// and a prefab is created that references the updated materials.

Most solutions say to simply set the shader render modes to Opaque or Transparent/Fade, but this is only part of the solution this script performs:

Set shader render modes correctly per material.

Create new materials for Eyes and Eyelashes.

Correct the MetallicAndSmoothness map (has inverted alpha).

Set texture import settings correctly (alpha channel, normal maps).

Set smoothness per material to roughly match visuals in Adobe Fuse CC (beta).

Updated to handle masks (partially), gloves, shoes, hats.

Updated to correctly handle non-alpha hair.

Update to fix problem when textures are reimported e.g. by copying the imported character folder to another computer, or pulling commits of characters from Collaborate.

This script wasn't released in any officially supported way, however. It really is just "as-is", and is somewhat brittle code that worked only for characters pre-supplied within the Adobe Fuse program (male/female fit/scan 1,2,3. and a single

I've looked at the provided gunman.zip and can repro the crash. It appears you've exported the object, modified the character, and importantly changed the textures - which is breaking the script assumptions. The texture expected names are not being found, rather the textures are all now packed into a single texture. The metallic/smoothness textures need their alpha channel to be inverted to work correctly. After the import crashes, the files are still there and can be manually attached to the material, and manually set the material Rendering Mode to Opaque (this works for all texture component on this model except the eyelashes - packing makes it impossible to set the two modes required to make both body and eyelash textures The script does this in MixamoAssetProcessor::OnPostprocessTexture() by setting alpha to (1 - alpha). Your shader looks great so I'm sure it already does the right thing here, on-the-fly, without need to change the texture I have not seen this assertion, so cannot reproduce the problem to attempt a fix. The quoted code is not within my script so I'm at a loss.

You need to learn, the basics of coding 101, you don't know enough, to even debug that? stop, learn, try again. This a free script, its your responsibility, no one else's. Scared to open a text editor, and delete a case statement? It is in english, and is telling you what to do! It hard, intimidating at first, even after 10 years, I screw up. Debug.Log("texBase.format" + texBase.format) Yesterday, I spent 4 hours trying to get a camera to switch from cockpit to flight view, on a simple bool, yes or no., back and forth I went, debug logging, yelling, slamming my keyboard, not realizing, I had set that to always be true, somewhere earlier in my code, I am a idiot sometimes!.anyway Is the only way a NUMBER 47 MIGHT APPEAR. But the numerals 4 and 7 NEVER APPEAR TOGETHER, IN QUOTES, out of quotes, EVER, in this script as a case statement. I don't know.
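The alpha inversion mentioned in this post (setting alpha to 1 - alpha on the metallic/smoothness texture) is a one-line per-pixel operation. The Python sketch below shows it on a plain list of RGBA tuples with channels in the 0 to 1 range; it is not the importer script's code, and `invert_alpha` is a hypothetical name.

```python
def invert_alpha(pixels):
    """Return a copy of the RGBA pixels with alpha replaced by 1 - alpha."""
    return [(r, g, b, 1.0 - a) for r, g, b, a in pixels]


texture = [
    (0.5, 0.5, 0.5, 0.25),   # mostly transparent -> mostly opaque
    (0.1, 0.2, 0.3, 1.0),    # fully opaque -> fully transparent
]
inverted = invert_alpha(texture)
```

The colour channels are left untouched; only the fourth channel flips.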


Week 11: Lectures & Readings

It was my final lecture for IGB220 today, which is a bit sad because this has been my favourite unit this semester, but I guess on the bright side, no more driving home in 5pm traffic this semester. Dr. Conroy gave the class a really great review of what is to come and a nice technical tour of some of the software to come. We discussed the likes of Unity and Unreal and their purpose in the industry and beyond. It was nice to have a bit of exposure to Unity and not be totally in the dark, unlike in my coding classes.

I got to learn about prefabs and handy actions like linking scripts and making structures. I think I learnt the most from this lecture. I’m currently doing CAB201 (hello coding) and IGB283 (death by math) so I have a basic understanding of arrays and loops, but it was really cool to hear how important they are and how they can be used in gaming. It kind of boosted my efforts for success in those classes.

I continued on to part 3 of Fullerton’s book this week, I made my way through chapter 12 over a couple of nights. In this chapter Fullerton explored team structures and the roles that individuals can play in the hierarchy. I read about the specifics and various dynamics of publishers and developers, and learnt a lot more about these relationships and companies, who I only previously knew by name, but had no idea what exactly they do. I found the chapter was really helpful for my thought process with Assignment 3, even though we are only a team of 3, it got me thinking about the various roles I need to play in the team as well as the roles that my group partners could take on. It really opened my eyes up to how much is required of game developers, whether it is an indie project or a ‘AAA’ title.

References:

Image retrieved from:

https://i.chzbgr.com/full/9340630784/h357E9FA4/text-when-you-write-10-lines-of-code-without-searching-on-google-itaint-much-but-its-honest-work


Unite 2018 report

Introduction

A few Wizcorp engineers participated in Unite Tokyo 2018 in order to learn more about the future of Unity and how to use it for our future projects. Unite is an event held by Unity in different major cities, including Seoul, San Francisco and Tokyo; the Tokyo edition ran for 3 days. It takes the form of talks given by various Unity employees from around the globe, where they give insight into existing or future technologies and teach people about them. You can find more information about Unite here.

In retrospect, here is a summary of what we’ve learned or found exciting, and that could be useful for the future of Wizcorp.

Introduction first day

The presentation on ProBuilder was very interesting. It showed how to quickly make levels in a way similar to Tomb Raider for example. You can use blocks, slopes, snap them to grid, quickly add prefabs inside and test all without leaving the editor, speeding up the development process tremendously.

They made a presentation on ShaderGraph. You may already be aware of it, but in case you’re not, it’s worth checking out.

They talked about the lightweight pipeline, which provides a new modular architecture to Unity, with the goal of getting it to run on smaller devices. In our case, that means that we could get a web app in something as little as 72 kilobytes! If it delivers as expected (end of 2018), it may seriously call into question the need to stick to web technologies.

They showed a playable web ad that loads and plays within one second over wifi. It then drives the player to the App Store. They think that this is a better way to advertise your game.

They have a new tool set for the automotive industry, which allows making very good-looking simulations with models of real cars.

They are holding Unity Hack Week events around the globe. Check that out if you are not aware of it.

They introduced the Burst compiler, which aims to take advantage of multi-core processors and generates code with math and vector floating point units in mind, optimizing for the target hardware and providing substantial runtime performance improvements.

They presented improvements in the field of AR, typically with a game that is played on a sheet that you’re holding in your hand.

Anime-style rendering

They presented the processes that they use in Unity to get as close as possible to anime-style rendering, and the result was very interesting. None of it is rocket science, though: it mostly consists of effects that you would use in other games, such as full screen distortion, blur, bloom, synthesis on an HDR buffer, cloud shading, a weather system through usage of fog, skybox color config and fiddling with the character lighting volume.

Optimization of mobile games by Bandai Namco

In Idolmaster, a typical stage scene has 15k polygons only, and a character has a little more than that. They make the whole stage texture fit on a 1024x1024 texture for performance.

For post processing, they have DoF, bloom, blur, flare and 1280x720 as a reference resolution (with MSAA).

The project was started as an experiment in April of 2016, then was started officially in January of 2017, then released on June 29th of the same year.

They mentioned taking care to minimize draw calls and SetPass calls.

They use texture atlases with indexed vertex buffers to reduce memory and increase performance.

They used the Snapdragon Profiler to optimise for the target platforms. They would use an approach where they try, improve, try again and then stop when it’s good enough.

One of the big challenges was to have lives (concerts) with 13 people on stage (lots of polys / info).

Unity profiling and performance improvements

This presentation was made by someone who audits commercial games and gives them support on how to improve the performance or fix bugs.

http://github.com/MarkUnity/AssetAuditor

Mipmaps add about 33% to texture size, so try to avoid them where you can.
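The 33% figure can be checked with a geometric series. This is a sketch that assumes a full mip chain in which each level has half the width and half the height (so one quarter of the texels) of the level above it; $S$ is a symbol introduced here for the size of the base texture, not something from the talk.

$$
S_{\text{total}} = S \sum_{k=0}^{\infty} \left(\frac{1}{4}\right)^{k} = \frac{S}{1 - \frac{1}{4}} = \frac{4}{3}\,S \approx 1.33\,S
$$

Under that assumption, all the smaller levels together add roughly one third on top of the base texture.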

Enabling read/write in a texture asset always adds 50% to the texture size since it needs to remain in main memory. Same for meshes.

Vertex compression (in player settings) just uses half precision floating points for vertices.

Play with animation compression settings.

ETC Crunch textures are decrunched on the CPU, so be careful about the additional load.

Beware of animation culling: when offscreen, culled animations are not processed (as if disabled). With non-deterministic animations, this means that when the animation is enabled again, it has to be computed for the whole time during which it was disabled, which may create a huge CPU spike (this can also happen when disabling and then re-enabling an object).

Presentation of Little Champions

Looks like a nice game.

It was started on Unity 5.x and then ported to Unity 2017.x.

They do their own custom physics processes, by using WaitForFixedUpdate from within FixedUpdate. The OnTriggerXXX and OnCollisionXXX handlers are called afterwards.

They have a very nice level editor for iPad that they used during development. They say it was the key to creating nice puzzle levels: they could test them quickly, fix and try again, all on the final device on which the game is going to run.

Machine learning

A very interesting presentation that showed how to teach a computer to play a simple Wipeout clone. It was probably as simple as you could make it (since you only steer left or right, and look out for walls using 8 ray casts).

I can enthusiastically suggest that you read about machine learning yourself, since there’s not really room for a full explanation of the concepts approached there in this small article. But the presenter was excellent.

Some concepts:

You have two training methods: one is reinforcement learning (where the agent learns through rewards, trial and error, and super-speed simulation, so that it becomes “mathematically optimal” at the task) and one is imitation learning (like humans, learning through demonstrations, without rewards, requiring real-time interaction).

You can also use cooperative agents (one brain -- the teacher, and two agents -- like players, or hands -- playing together towards a given goal).

Learning environment: Agent <- Brain <- Academy <- Tensorflow (for training AIs).

Timeline

Timeline is a plugin for Unity that is designed to create animations that manipulate the entire scene based on time, a bit like Adobe Premiere™.

It consists of tracks, with clips which animate properties (a bit like the default animation system). It’s very similar but adds a lot of features that are more aimed towards creating movies (typically for cut scenes). For example, animations can blend among each other.

The demo he showed us was very interesting: he used Timeline to create an entire RTS game.

Every section would be scripted (reaction of enemies, cut scenes, etc.) and using conditions the track head would move and execute the appropriate section of scripted gameplay.

He also showed a visual novel like system (where input was waited on to proceed forward).

He also showed a space shooter. The movement and patterns of bullet, enemies, then waves and full levels would be made into tracks, and those tracks would be combined at the appropriate hierarchical level.

Ideas of use for Timeline: rhythm game, endless runner, …

On a personal note, I like his idea: he gave himself one week to try creating a game using this technology as much as possible, so that he could see what it’s worth.

What was interesting (and hard to summarize in a few lines here, but I recommend checking it out) is that he sometimes uses Timeline to dictate the gameplay and sometimes does the opposite. Used wisely, it can be a great game design tool for quickly building a prototype.

Timeline is able to instantiate objects, read scriptable objects and is very extensible.

It can also be used by programmers or game designers to quickly create the “scaffolding” of a scene and give that to the artists and designers, instead of having them guess how long each clip should take, etc.

Another interesting feature of Timeline is the ability to start or resume at any point very easily. Very handy in the case of the space shooter to test difficulty and level transitions for instance.

Suggest downloading “Default Playables” in the Asset Store to get started with Timeline.

Cygames: about optimization for mid-range devices

Features they used

Sun shaft

Lens flare (with the use of the Unity collision feature for determining occlusion, and it was a challenge to set colliders properly on all appropriate objects, including for example the fingers of a hand)

Tilt shift (not very convincing, just using the depth information to blur in post processing)

Toon rendering

They rewrote the lighting pipeline rendering entirely and compacted various maps (like the normal map) in the environment maps.

They presented where ETC2 is appropriate over ETC, which is basically that it reduces color banding, but takes more time to compress at the same quality and is not supported on older devices, and this was why they chose to not use it until recently.

Other than that, they mentioned various techniques that they used on the server side to ensure a good framerate and responsiveness. Also they mentioned that they reserved a machine with a 500 GB hard drive just for the Unity Cache Server.

Progressive lightmapper

The presentation was about their progress on the new lightmapper engine from which we already got a video some time ago (link below). This time, the presenter did apply that to a small game that he was making with a sort of toon-shaded environment. He showed what happens with the different parameters and the power of the new lighting engine.

A video: https://www.youtube.com/watch?v=cRFwzf4BHvA

This has to be enabled in the Player Settings (instead of the Enlighten engine).

The big news is that lights become displayed in the editor directly (instead of having to start the game, get Unity to bake them, etc.).

The scene is initially displayed without lights and little by little as they become available textures are updated with baked light information. You can continue to work meanwhile.

Prioritize view option: bakes what's visible in the camera viewport view first (good for productivity, works just as you’d expect it).

He explained some parameters that come into play when you select the best combination for performance vs speed:

Direct samples -> simply vectors from a texel (pixel on texture) to all the lights, if it finds a light it's lit, if it's blocked it's not lit.

Indirect samples: they bounce (e.g. emitted from ground, then bounces on object, then on skybox).

Bounces: 1 should be enough on very open scenes, else you might need more (indoor, etc.).

Filtering smooths out the result of the bake. Looks cartoonish.

They added the A-Trous blur method (preserves edges and AO).

Be careful about UV charts, which control how Unity divides objects (based on their normals, so each face of a cube would be in a different UV chart, for example). Filtering stops at the end of a chart, which creates a hard edge. More UV charts = a more “faceted” render (like low-poly). Note that with a very large number of UV charts the object will look round again, because the filtering will blur everything.

Mixed modes: normally lights are either realtime or baked.

3 modes: subtractive (subtracts shadows with a single color; can appear out of place); shadowmask (shadows are baked into separate lightmaps so that we can recolor them; still fast and flexible); and the most expensive one, where everything is done dynamically (useful for a sunlight cycle, for example). Distance shadowmask uses dynamic shadows only for objects close to the camera and baked lightmaps elsewhere.

The new C# Job system

https://unity3d.com/unity/features/job-system-ECS ← available from Unity 2018.1, along with the new .NET 4.x.

They are slowly bringing concepts from entity / component into Unity.

Eventually they’ll phase out the GameObject, which is too central and forces too much work to be single-threaded.

They explained why they made this choice:

Let’s take a list of GameObjects, each having a Transform, a Collider and a Rigidbody. Those parts are laid out in memory sequentially, object by object. A Transform actually holds a lot of properties, so accessing only a few of them across many objects (like particles) makes poor use of the cache.

With the entity/component system, you request the members that you are accessing, and the layout can be optimized for that. It can also be multi-threaded properly. All that is combined with the new Burst compiler, which generates more performant code based on the hardware.
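To make the memory-layout argument concrete, here is a minimal sketch in plain C# with no Unity dependency. `FatParticle`, `ParticleColumns` and `LayoutDemo` are hypothetical names invented for this illustration; they are not Unity’s Transform or the ECS API, and the sketch only contrasts the two layouts rather than measuring anything.

```csharp
using System;

// Hypothetical types for illustration only; these are not Unity's Transform or ECS API.

// "Array of structures": every particle carries all of its fields,
// so a loop that only needs Y still drags the unused fields through the cache.
public struct FatParticle
{
    public float X, Y, Z;
    public float RotX, RotY, RotZ, RotW;
    public float ScaleX, ScaleY, ScaleZ;
}

// "Structure of arrays": each field lives in its own contiguous array,
// so a loop over Y and FallSpeed reads only the bytes it actually uses.
public sealed class ParticleColumns
{
    public readonly float[] Y;
    public readonly float[] FallSpeed;

    public ParticleColumns(int count)
    {
        Y = new float[count];
        FallSpeed = new float[count];
    }

    // Moves every particle down by its fall speed, touching two tightly packed arrays.
    public void Fall(float deltaTime)
    {
        for (int i = 0; i < Y.Length; i++)
        {
            Y[i] -= FallSpeed[i] * deltaTime;
        }
    }
}

public static class LayoutDemo
{
    // The same update on the array-of-structures layout, for comparison.
    public static void Fall(FatParticle[] particles, float fallSpeed, float deltaTime)
    {
        for (int i = 0; i < particles.Length; i++)
        {
            particles[i].Y -= fallSpeed * deltaTime;
        }
    }
}
```

Both `Fall` methods compute the same thing; the difference is only in how many unrelated bytes sit between two consecutive values that the loop reads.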

Entities don't appear in the hierarchy, like Game Objects do.

In his demo, he manages to display 80,000 snowflakes in the editor instead of 13,000.

Here is some example code:

```csharp
using Unity.Collections;
using Unity.Entities;
using Unity.Jobs;
using Unity.Mathematics;
using Unity.Rendering;
using Unity.Transforms;
using UnityEngine;

// Per-entity data for one snowflake.
public struct SnowflakeData : IComponentData
{
    public float FallSpeedValue;
    public float RotationSpeedValue;
}

public class SnowflakeSystem : JobComponentSystem
{
    // Runs on every entity that has Position, Rotation and SnowflakeData.
    private struct SnowMoveJob : IJobProcessComponentData<Position, Rotation, SnowflakeData>
    {
        public float DeltaTime;

        public void Execute(ref Position pos, ref Rotation rot, ref SnowflakeData data)
        {
            pos.Value.y -= data.FallSpeedValue * DeltaTime;
            rot.Value = math.mul(math.normalize(rot.Value), math.axisAngle(math.up(), data.RotationSpeedValue * DeltaTime));
        }
    }

    protected override JobHandle OnUpdate(JobHandle inputDeps)
    {
        var job = new SnowMoveJob { DeltaTime = Time.deltaTime };
        return job.Schedule(this, 64, inputDeps);
    }
}

public class SnowflakeManager : MonoBehaviour
{
    public int FlakesToSpawn = 1000;
    public static EntityArchetype SnowFlakeArch;

    [RuntimeInitializeOnLoadMethod(RuntimeInitializeLoadType.BeforeSceneLoad)]
    public static void Initialize()
    {
        var entityManager = World.Active.GetOrCreateManager<EntityManager>();
        SnowFlakeArch = entityManager.CreateArchetype(
            typeof(Position),
            typeof(Rotation),
            typeof(MeshInstanceRenderer),
            typeof(TransformMatrix));
    }

    void Start()
    {
        SpawnSnow();
    }

    void Update()
    {
        if (Input.GetKeyDown(KeyCode.Space))
        {
            SpawnSnow();
        }
    }

    void SpawnSnow()
    {
        var entityManager = World.Active.GetOrCreateManager<EntityManager>();

        // Temporary allocation, so that we can dispose of it afterwards
        NativeArray<Entity> snowFlakes = new NativeArray<Entity>(FlakesToSpawn, Allocator.Temp);
        entityManager.CreateEntity(SnowFlakeArch, snowFlakes);

        for (int i = 0; i < FlakesToSpawn; i++)
        {
            // RandomPosition made by the presenter
            entityManager.SetComponentData(snowFlakes[i], new Position { Value = RandomPosition() });
            entityManager.SetSharedComponentData(snowFlakes[i], new MeshInstanceRenderer
            {
                material = SnowflakeMat,
                ...
            });
            entityManager.AddComponentData(snowFlakes[i], new SnowflakeData
            {
                FallSpeedValue = RandomFallSpeed(),
                RotationSpeedValue = RandomFallSpeed()
            });
        }

        // Dispose of the array
        snowFlakes.Dispose();

        // Update UI (variables made by the presenter)
        numberOfSnowflakes += FlakesToSpawn;
        EntityDisplayText.text = numberOfSnowflakes.ToString();
    }
}
```

Conclusion

We hope that you enjoyed reading this little summary of some of the presentations that we attended.

As a general note, I would say that Unite is an event aimed at hardcore Unity fans. There is some time between the sessions for networking with Unity engineers (who come from all around the world), and there are not many beginners. It can be a good time to extend professional connections with (very serious) people from the Unity ecosystem, but it is not great for recruiting, for instance. You have to go for it and make it happen, though. By default the program will just have you follow sessions one after another, with value that is probably similar to what you would get by watching the official presentations on YouTube in a few weeks or months. I’m a firm believer that socializing is way better than watching videos from home, so you won’t get me saying that it’s a waste of time, but if you are to send people there, it works best when they are proactive and passionate about Unity themselves. If they just use it at work, I feel that the value is rather small, and I would even dare say that it’s a bit of a failure on the Unity team’s part, as it can be hard to see who they are targeting.

There is also the Unite party, which you have to book way before, that may improve value for networking, but none of us could attend.


AR with Unity! | Image Recognition Using AR Foundation

Introduction to AR Foundation:

Augmented Reality can be used in Unity through AR Foundation. This interface makes a Unity developer’s work easier. To use this package you also need some plugins, which are mentioned below.

AR Foundation includes core features from ARKit, ARCore, Magic Leap, and HoloLens, as well as unique Unity features to build robust apps that are ready to ship to internal stakeholders or on any app store. This framework enables you to take advantage of all of these features in a unified workflow.

AR Foundation lets you take currently unavailable features with you when you switch between AR platforms.

The AR-related subsystems are defined in the AR Subsystems package. These APIs are in the UnityEngine.Experimental.XR namespace, and consist of a number of Subsystems.

AR Foundation uses MonoBehaviours and APIs to deal with devices that support the following concepts:

Device tracking: track the device’s position and orientation in physical space.

Plane detection: detect horizontal and vertical surfaces.

Point clouds, also known as feature points.

Anchor: an arbitrary position and orientation that the device tracks.

Light estimation: estimates for average color temperature and brightness in physical space.

Environment probe: a means for generating a cube map to represent a particular area of the physical environment.

Face tracking: detect and track human faces.

2D image tracking: detect and track 2D images.

3D object tracking: detect 3D objects.

Meshing: generate triangle meshes that correspond to the physical space.

Body tracking: 2D and 3D representations of humans recognized in physical space.

Collaborative participants: track the position and orientation of other devices in a shared AR experience.

Human segmentation and occlusion: apply distance to objects in the physical world to rendered 3D content, which achieves a realistic blending of physical and virtual objects.

Raycast: queries physical surroundings for detected planes and feature points.

Pass-through video: optimized rendering of mobile camera image onto the touch screen as the background for AR content.

Session management: automatic manipulation of the platform-level configuration when AR features are enabled or disabled.

(Above concepts reference are taken from: https://docs.unity3d.com/Packages/[email protected]/manual/index.html)

Feature Support per Platform:

Prerequisites

Unity 2019 version or above.

Android SDK level 24 and above.

Android device which has Android 7.0 and above.

ARCore XR plugin:

Currently, [email protected] preview is available. Adding this plugin enables ARCore support via Unity’s multi-platform XR API. Supported features are:

Background Rendering

Horizontal Planes

Depth Data

Anchors

Hit Testing

For more current version information visit: https://docs.unity3d.com/Packages/[email protected]/manual/index.html

ARKit XR plugin:

Currently, [email protected] preview is available. Adding this plugin enables ARKit support via Unity’s multi-platform XR API. Supported features are:

Efficient Background Rendering

Horizontal Planes

Depth Data

Anchors

Hit Testing

Face Tracking

Environment Probes

For more current version information visit: https://docs.unity3d.com/Packages/[email protected]/manual/index.html

Image Recognition Using AR Foundation:

Setup

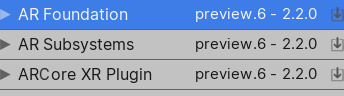

To install AR Foundation, go to Window -> Package Manager, press the Advanced button, and select Show preview packages. Then install AR Foundation version 2.2.0-preview.06. You also need to install the ARCore XR Plugin and the ARKit XR Plugin the same way, in these versions:

ARCore XR Plugin (for Android): 2.2.0-preview.06

ARKit XR Plugin (for iOS): 2.2.0-preview.06

Note: make sure the SDKs you are using are up to date beforehand. (The versions mentioned above worked for me, which is why I used them.)

Player Settings

In Player Settings, add the company name and package name you want to use. Then enable Auto Graphics API, set Minimum API Level to 24 and set Target API Level to the highest available.

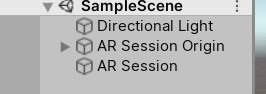

Hierarchy

Right-click in the Hierarchy, open the XR menu, and add an AR Session and an AR Session Origin.

Then give the AR Camera inside the AR Session Origin the MainCamera tag.

Inspector

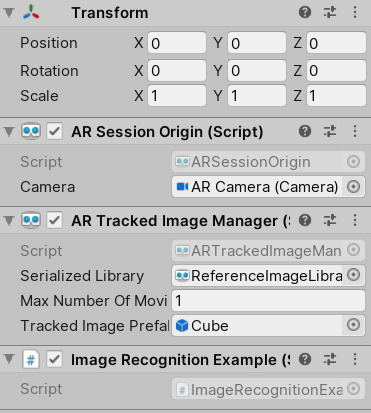

Click on the AR Session Origin and add the AR Tracked Image Manager component.

We now need a serialized reference image library and an image prefab.

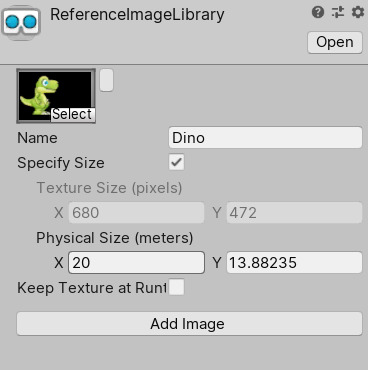

Reference Image Library

In the Project tab, right-click and choose Create -> XR -> ReferenceImageLibrary. This is a serialized object, so we need to populate it with the images to recognize.

After adding the data, assign the library to the AR Tracked Image Manager component.

Prefab

Create a cube in the scene, adjust it, drag it to the Project tab to turn it into a prefab, and assign that prefab to the AR Tracked Image Manager component.

Script

Create a script that reacts to recognized images and does what you want with them through the AR Tracked Image Manager component.

Add the code written in the script above.

Build

Press CTRL + B to build your app. Make sure you have added the scene to the build.

Now it’s complete. Open the image that you added to the reference library, point the device at it, and you should see the prefab that you assigned to the AR Tracked Image Manager component.

Video

For the video click on: https://youtu.be/kYZiPnGhNsM

Conclusion:

Hence, image recognition is one of the features that are helpful in AR-related applications, and it will be very useful in games.

This was just a basic setup to start working with AR Foundation in unity. There are many more features available for user-interactive gaming. Image Recognition is an example of engaging gaming experience.

This tutorial can be used to work with any game or application.


Dev Log 4/20 - UI Changes and the Four Corners Minigame - Ian

Since the project has started I’ve accomplished three major things:

- Restructuring and importing the Combat System that was created in a previous CS 499 project.

- Modifications to the combat interface.

- Creation of the Four Corners minigame.

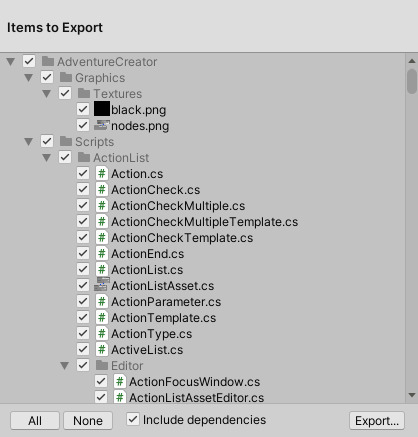

The first thing I did was package only the essential files, scripts, and objects that were used in the combat scene, by checking the “Include dependencies” option when exporting a Unity package (see screenshot below). I then imported the exported package into the new working Unity project and fixed the errors caused by moving what the previous combat team had done, which was built in a different version of Unity, into the new project.

My second task was the improvements to the user interface. For this, Dr. Byrd and Dr. Harrison requested that a “box” be added at the bottom of the screen, similar to the original Final Fantasy 7’s user interface - see screenshot. They wanted the box because it was difficult to tell what was going on in the combat scene while the minigame was active. Essentially, the minigame covered the majority of the middle of your screen, so you couldn’t see health or when the enemy was “auto attacking,” which happens at set intervals.

To do this I simply added a UI panel object and had it cover a desired amount of the screen - see screenshot.

After showing this to Dr. Byrd and Dr. Harrison we agreed that it was a bit jarring. Instead, we decided that the minigame should be localized to this bottom panel, but the bottom panel should not be visible. This is how it currently works - see screenshot below. Also, I made some minor improvements by moving the health bars over the player’s characters, and shifted the characters up slightly, making their bodies more visible in combat.

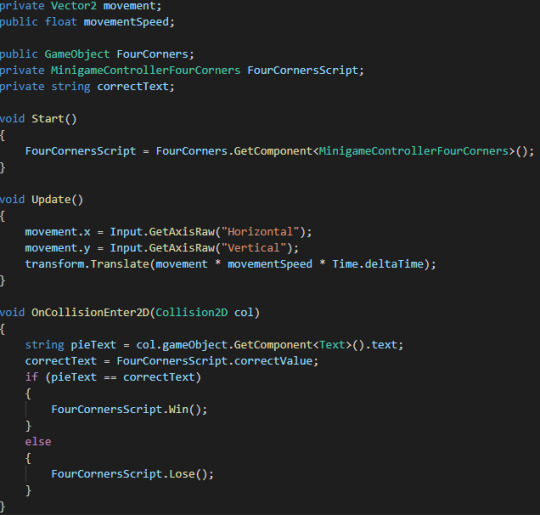

The third and most involved thing I implemented was the Four Corners minigame. The idea is that this should be the evaluative minigame: it takes what the other minigames or parts of the game have taught the player and directly quizzes their understanding. Essentially, the player controls the square in the middle of the panel, and they have to navigate their square to the PIE word which matches the given meaning before the timer runs out.

Using the minigame structure that Vince implemented, I began creating a minigame prefab that would be instantiated whenever the minigame is to be played. I did this by creating a UI Canvas object in the MinigameTestScene scene and filling it with six image elements, one in each corner and two in the center. Each corner image represents a PIE word that the player could guess; three of them are incorrect and one is correct. One of the images in the center is the player, and the other is the meaning of the correct word. (See above screenshot of UI.) Each image has a text element child, which will be set to the word or the meaning of the word. I also added a slider object which is used to keep track of the time remaining.

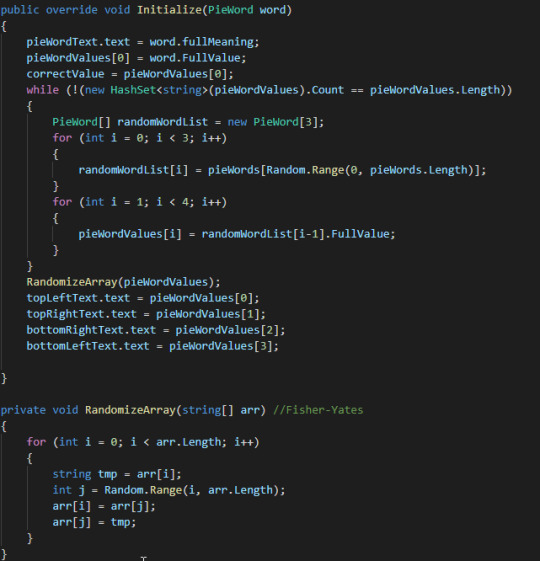

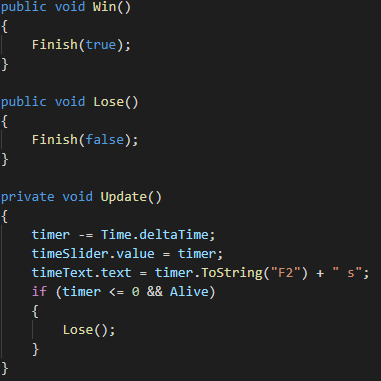

In script, I used the MinigameController class to construct a controller script for my minigame, which I called MinigameControllerFourCorners.cs. I defined the given abstract Initialize method, which takes the correct PIE word as a parameter (the word is given when the controller is called). I took this PIE word and set its meaning on a text element in the center of the screen. I also stored the correct word as the first element of an array of words, randomWordList, and in a public variable that holds the correct value. The public variable is referenced by another script; the array is randomized later. I then generated three unique random PIE words and stored them in randomWordList. Finally, I shuffled the array and assigned each element to the text object in one of the four corners. See screenshot.

Similarly, I implemented Win() and Lose() functions, which are used by the player object, and a simple timer in the Update method.

Finally, I implemented a script to control the player, called PlayerController.cs. This is used by the player object to control movement and collision detection. Each of the objects located at the corners has a collider component. If the player collides with one of these objects, I use the OnCollisionEnter2D(..) Unity function to handle the collision. Essentially, PlayerController.cs references MinigameControllerFourCorners.cs and compares the value of correctText (set in the minigame controller) with the text value of the element that the player collides with. If they match, the player wins; if they don’t, the player fails. I also implemented simple movement using Input.GetAxisRaw and transform.Translate.
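The word-selection step described above can be sketched in plain C# roughly as follows. This is not the actual MinigameControllerFourCorners.cs: `FourCornersWords` and `BuildCornerWords` are hypothetical names, the word pool is passed in as a parameter, and `System.Random` stands in for whatever random source the project uses.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration; not the actual MinigameControllerFourCorners.cs.
public static class FourCornersWords
{
    // Returns four words: the correct one plus three unique distractors, in random order.
    public static string[] BuildCornerWords(string correctWord, IReadOnlyList<string> allWords, Random rng)
    {
        // Collect the candidate distractors: every distinct word except the correct one.
        var candidates = new List<string>();
        foreach (string word in allWords)
        {
            if (word != correctWord && !candidates.Contains(word))
            {
                candidates.Add(word);
            }
        }
        if (candidates.Count < 3)
        {
            throw new ArgumentException("Need at least three distinct incorrect words.", nameof(allWords));
        }

        // The correct word goes in first, followed by three random distractors.
        var randomWordList = new string[4];
        randomWordList[0] = correctWord;
        for (int i = 1; i < 4; i++)
        {
            int pick = rng.Next(candidates.Count);
            randomWordList[i] = candidates[pick];
            candidates.RemoveAt(pick); // removing the pick keeps the distractors unique
        }

        // Fisher-Yates shuffle, so the correct word does not always land in the same corner.
        for (int i = randomWordList.Length - 1; i > 0; i--)
        {
            int j = rng.Next(i + 1);
            string temp = randomWordList[i];
            randomWordList[i] = randomWordList[j];
            randomWordList[j] = temp;
        }
        return randomWordList;
    }
}
```

Each element of the returned array would then be assigned to one corner’s text element, while the correct word is kept separately for the comparison done on collision.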

words: 800


300+ TOP UNITY 3D Interview Questions and Answers

UNITY 3D Interview Questions for freshers and experienced :-

1. What is Unity 3D?

Unity 3D is a powerful, cross-platform and fully integrated development engine which provides out-of-the-box functionality to create games and other interactive 3D content.

2. What are the characteristics of Unity3D?

The characteristics of Unity are:

- It is a multi-platform game engine with features such as 3D objects, physics, animation, scripting and lighting
- Accompanying script editor: MonoDevelop (Windows/Mac); it can also use Visual Studio (Windows)
- 3D terrain editor
- 3D object animation manager
- GUI system
- Executable exporter for many platforms: Web player, Android, native application, Wii

In Unity 3D, you can assemble art and assets into scenes and environments, adding special effects, physics and animation, lighting, etc.

3. What are the important components of Unity 3D?

Some important Unity 3D components include:

- Toolbar: it features several important manipulation tools for the scene and game windows
- Scene View: a fully rendered 3D preview of the currently open scene, which enables you to add, edit and remove GameObjects
- Hierarchy: it displays a list of every GameObject within the current scene view
- Project Window: in complex games, the project window is used to search for specific game assets as needed. It explores the assets directory for all textures, scripts, models and prefabs used within the project
- Game View: in Unity you can view your game and at the same time make changes to it while you are playing, in real time

4. What are Prefabs in Unity 3D?

A prefab in Unity 3D is a pre-fabricated object template (a class combining objects and scripts). At design time, a prefab can be dragged from the project window into the scene window and added to the scene’s hierarchy of game objects. If desired, the object can then be edited. At run time, a script can cause a new object instance to be created at a given location or with a given set of transform properties.

5. What is the function of the Inspector in Unity 3D?

The inspector is a context-sensitive panel, where you can adjust the position, scale and rotation of the Game Objects listed in the Hierarchy panel.

6. What's the best game of all time and why?

The most important thing here is to answer relatively quickly, and back it up. One of the fallouts of this question is age. Answering "Robotron!" to a 20-something interviewer might lead to a feeling of disconnect. But sometimes that can be good. It means you have to really explain why it's the best game of all time. Can you verbally and accurately describe a game to another person who has never played it? You'll rack up some communication points if you can. What you shouldn't say is whatever the latest hot game is, or blatantly pick one that the company made (unless it's true and your enthusiasm is bubbling over). Be honest. Don't be too eccentric and niche, and be ready to defend your decision.

7. Do you have any questions regarding us?

Yes. Yes, you do have questions. Some of your questions will have been answered in the normal give-and-take of conversation, but you should always be asked if you have others (and if not, something's wrong). Having questions means you're interested. Some questions are best directed to HR, while others should be asked of managers and future co-workers. Ask questions that show an interest in the position and the long-term plans of the company. For some ideas, see "Questions You Should Ask in an Interview," below.

8. What are the characteristics of Unity3D?

The characteristics of Unity are:

- It is a multi-platform game engine with features such as 3D objects, physics, animation, scripting and lighting
- Accompanying script editor: MonoDevelop (Windows/Mac); it can also use Visual Studio (Windows)
- 3D terrain editor
- 3D object animation manager
- GUI system
- Executable exporter for many platforms: Web player, Android, native application, Wii

In Unity 3D, you can assemble art and assets into scenes and environments, adding special effects, physics and animation, lighting, etc.

9. List out some best practices for Unity 3D

- Cache component references: always cache references to the components you need to use in your scripts
- Memory allocation: instead of instantiating new objects on the fly, always consider creating and using object pools. This helps reduce memory fragmentation and makes the garbage collector work less
- Layers and collision matrix: for each new layer, a new column and row are added to the collision matrix. This matrix is responsible for defining interactions between layers
- Raycasts: they let you fire a ray in a certain direction with a certain length and tell you whether it hit something
- Physics 2D/3D: choose the physics engine that suits your game
- Rigidbody: it is an essential component when adding physical interactions between objects
- Fixed Timestep: the fixed timestep value directly impacts the FixedUpdate() and physics update rate

10. What do you do on your own time to extend your skills?

As a programmer, do you work on home projects? As a designer, do you doodle design ideas or make puzzles? As an artist, do you do portrait work? Having hired many people in the past, one of the things I can speak to with authority is that those people who spend their off time working on discipline-related projects are the ones who are always up on current trends, have new ideas, are most willing to try something new, and will be the ones taking stuff home to tinker with on their own time.

Now that shouldn't be expected of everyone, but the sad reality is that there is competition for jobs out there, and those who are prepared to put in the extra work are the ones that are going to be in hot demand. Demonstrating that you learned C# over a weekend because you thought it was cool for prototyping is exactly the kind of thing a programming manager wants to hear. Suddenly your toolset expanded, and not only did it show willingness to do something without being told, it makes you more valuable. The only care to take here is to not mention an outside situation that might detract from or compete with your day job.